The first blog post of this three-part series was an introduction to Mixed Reality technology. This chapter examines in more detail the technical requirements needed to support the various types of augmented and virtual experiences.

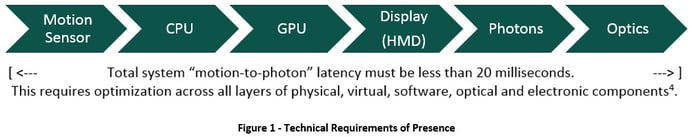

The computing power needed to create a Mixed Reality experience can be extremely demanding. In a Virtual Reality (VR) system, for example, the user is completely immersed in an artificial environment that isolates them from the real world. The critical components of this technology are image rendering and motion tracking. When these systems work together, they allow the user to “feel immersed, or present, and the illusion of VR is compelling enough to transport us to another place” [3]. The key goal of VR is to "[convince] the brain that a computer-generated 3D environment delivered to your eyes via a headset is 'real' -- a concept known as 'presence'" [6]. Convincing the brain of ‘presence’ requires meeting some minimum requirements. One of them concerns latency: from the moment a user makes a gesture or movement, the system must detect the motion and show the result on the display in less than 20 ms.
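To see why the 20 ms limit is demanding, it helps to add up the stages between a head movement and the updated image. The Python sketch below does that. The stage names and every millisecond value except the 20 ms threshold are hypothetical placeholders, not measurements of any real headset. The render stage is set to one full frame time (1000 / fps), so the same pipeline can be checked at different frame rates.

```python
# Minimal latency-budget sketch. All stage timings below are hypothetical
# placeholders; only the 20 ms threshold comes from the text.
PRESENCE_BUDGET_MS = 20.0


def frame_time_ms(fps: float) -> float:
    """Time available to render one frame at the given frame rate."""
    return 1000.0 / fps


def pipeline_stages(fps: float) -> dict:
    """Hypothetical stages between a head movement and updated pixels (ms)."""
    return {
        "sensor_sampling": 1.0,        # assumed IMU read time
        "sensor_fusion": 1.0,          # assumed motion-processing time
        "render": frame_time_ms(fps),  # one full frame of rendering
        "display_scanout": 5.0,        # assumed time to light up the display
    }


def motion_to_photon_ms(stages: dict) -> float:
    """Total latency is the sum of the sequential stages."""
    return sum(stages.values())


for fps in (60, 120):
    total = motion_to_photon_ms(pipeline_stages(fps))
    verdict = "within" if total < PRESENCE_BUDGET_MS else "over"
    print(f"{fps} fps: {total:.1f} ms ({verdict} the {PRESENCE_BUDGET_MS:.0f} ms budget)")
```

With these assumed numbers, the 60 fps pipeline exceeds the budget while the 120 fps pipeline fits within it, because rendering alone consumes about 16.7 ms per frame at 60 fps versus about 8.3 ms at 120 fps.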

In a virtual environment that lacks these minimum requirements, the illusion of reality is compromised. The brain can be very sensitive to inconsistent sensory input: a sub-par experience makes the environment and interactions look wrong to our visual system, and can cause “dizziness, disorientation, nausea and headaches” [6].

In Augmented Reality (AR) environments, where computer-generated information and virtual objects are overlaid on the real world, physical disorientation is less of a problem. This is because the user can still see the real world, where everything behaves as expected.

Hardware

The hardware needed for an acceptable user experience can typically be assembled by adding a few peripheral components to a relatively modern personal computer (PC). An exceptional experience, however, requires the latest ‘cutting-edge’ workstation with high-end graphics processing capabilities and a powerful CPU.

To reiterate, the goal of Virtual Reality is to convince the user that they are somewhere else. This is accomplished by deceiving the brain, specifically the visual cortex and the other parts of the brain that perceive motion. Achieving the illusion of presence requires a number of technologies working together.

The Essential Components of ‘Presence’ [5]

- First-person perspective or point-of-view -- all interaction is represented as being ‘seen’ from the eyes of the user

- Wide field-of-view -- the ability to perceive objects in peripheral vision, with a field of view of at least 80 degrees

- 3-Dimensional positional audio -- sound that is perceived as coming from accurate positions within the environment

- 3-Dimensional freedom-of-movement -- the ability to explore the environment around the user
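As one illustration of what positional audio involves, a sound coming from one side reaches the nearer ear slightly earlier than the farther one, and the brain uses this interaural time difference (ITD) as a direction cue. The Python sketch below uses Woodworth's classic spherical-head approximation. The head radius is an assumed average, and production spatial-audio engines typically rely on richer models such as head-related transfer functions (HRTFs) rather than this single cue.

```python
import math

HEAD_RADIUS_M = 0.0875        # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0    # speed of sound in air at roughly 20 °C


def interaural_time_difference_s(azimuth_deg: float) -> float:
    """Woodworth spherical-head estimate of the arrival-time gap between ears.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("this simple form covers azimuths from 0 to 90 degrees")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


for azimuth in (0, 30, 60, 90):
    itd_ms = interaural_time_difference_s(azimuth) * 1000.0
    print(f"source at {azimuth} degrees: far ear hears it {itd_ms:.2f} ms later")
```

An audio engine can delay the signal sent to the far ear by this amount, which is one small part of making a sound appear to come from a particular place in the scene.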

The main ingredient of immersive Virtual Reality is a “persistent 3D visual representation of the experience that conveys a sense of depth” [3]. To create the perception of depth, a separate image is required for each eye, one slightly offset from the other in order to simulate the parallax effect. The parallax effect is the “visual phenomenon where our brains perceive depth based on the difference in the apparent position of objects (due to our eyes being slightly apart from each other)” [3]. Stereoscopic Displays or Head Mounted Displays (HMDs) provide this experience by presenting a different image to each eye. They also use special lenses and optical distortion to produce a stereo image that our eyes perceive as having three-dimensional depth.

The system hardware and software must be able to render images at a minimum of 60 frames per second (fps); 120 fps is preferable and helps eliminate noticeable latency. Any drop in frame rate disrupts the illusion and can cause the nausea associated with poorly performing VR. Recent breakthroughs have made it possible to build high-resolution stereoscopic displays with built-in head-tracking sensors into a lightweight headset that can be purchased for a few hundred dollars.
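The per-eye offset described above can be sketched in Python: each eye's viewpoint is the head position shifted half an interpupillary distance (IPD) to the left or right, and the renderer draws the scene once from each viewpoint. The 63 mm IPD, the axis convention and the function names are assumptions for illustration, not any headset SDK's API. The second function shows why this produces depth: the angle the two eyes' lines of sight make at an object (its parallax angle) shrinks as the object moves farther away.

```python
import math

IPD_M = 0.063  # assumed interpupillary distance in meters (a typical adult value)


def eye_positions(head_pos, yaw_rad, ipd=IPD_M):
    """Return (left_eye, right_eye) for a head at head_pos turned by yaw_rad.

    Assumed convention: y is up, yaw 0 looks along -z, positive yaw turns left.
    """
    # Unit vector pointing to the viewer's right, perpendicular to the gaze.
    right_dir = (math.cos(yaw_rad), 0.0, -math.sin(yaw_rad))
    half = ipd / 2.0
    left_eye = tuple(p - half * r for p, r in zip(head_pos, right_dir))
    right_eye = tuple(p + half * r for p, r in zip(head_pos, right_dir))
    return left_eye, right_eye


def parallax_angle_deg(distance_m, ipd=IPD_M):
    """Angle subtended at an object straight ahead by the two eyes."""
    return math.degrees(2.0 * math.atan((ipd / 2.0) / distance_m))


left, right = eye_positions((0.0, 1.7, 0.0), yaw_rad=0.0)
print("left eye:", left, "right eye:", right)  # one render per viewpoint
for d in (0.5, 2.0, 10.0):
    print(f"object at {d} m: parallax angle {parallax_angle_deg(d):.2f} degrees")
```

Nearby objects produce a large difference between the two rendered images and distant objects a small one, which is the cue the brain interprets as depth.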

Input Devices

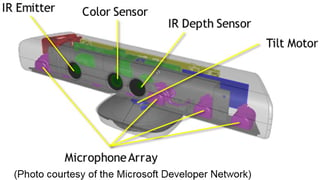

Motion tracking devices such as accelerometers, gyroscopes and other sensors are used to detect body motion. The software application integrates the data gathered from these devices and updates the user’s view of the scene accordingly. The Inertial Measurement Unit (IMU) has been a key innovation enabling rapid head-motion tracking. These head-tracking IMUs use technology similar to that in the average smartphone, combining gyroscope, accelerometer and/or magnetometer hardware to precisely measure changes in motion. These devices must track movement as quickly as possible, and the associated software must keep pace and combine the data correctly.
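One simple way to combine gyroscope and accelerometer data is a complementary filter. The gyroscope's rate is integrated for a fast, smooth response, while the accelerometer's reading of gravity slowly corrects the drift that integration accumulates. The Python sketch below estimates a single tilt angle. The axis convention, sample rate, gyro bias and weighting factor (`alpha`) are assumptions, and commercial headsets may use more sophisticated fusion over all three rotational axes.

```python
import math


def complementary_filter(samples, dt, alpha=0.98, initial_angle=0.0):
    """Fuse gyro rate and accelerometer tilt into one tilt estimate per sample.

    samples: iterable of (gyro_rate_rad_s, accel_x_g, accel_z_g) tuples.
    Assumed convention: tilt = atan2(accel_x, accel_z) when only gravity acts.
    """
    angle = initial_angle
    estimates = []
    for gyro_rate, ax, az in samples:
        accel_angle = math.atan2(ax, az)  # drift-free but noisy tilt from gravity
        # Integrate the gyro for responsiveness, then nudge toward the accel tilt.
        angle = alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# Simulated stationary head tilted 10 degrees, with a gyro that wrongly
# reports a constant 0.05 rad/s (bias). Sample rate and bias are assumed.
true_tilt = math.radians(10.0)
dt = 0.001
gyro_bias = 0.05
n = 5000  # 5 seconds at 1 kHz
samples = [(gyro_bias, math.sin(true_tilt), math.cos(true_tilt))] * n

fused = complementary_filter(samples, dt, initial_angle=true_tilt)
gyro_only = true_tilt + gyro_bias * dt * n  # pure integration drifts steadily

print(f"fused estimate:     {math.degrees(fused[-1]):.2f} degrees")
print(f"gyro-only estimate: {math.degrees(gyro_only):.2f} degrees")
```

The fused estimate stays close to the true 10 degrees, while the gyro-only estimate drifts further off the longer the sensor runs, which is why the two sources are combined.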

In Virtual Reality environments, HMDs completely enclose the user’s eyes and leave them blind to the outside world. This isn’t necessarily a problem when a standard “game controller” is used, but it can be when a keyboard and mouse are needed. For this reason, VR is pushing developers to use input devices that recognize body gestures and motion ‘visually’, or that respond to spoken commands. Technological advancements have allowed low-cost, hands-free motion input devices, such as the Microsoft Kinect®, to proliferate.

There are also input devices created specifically for control in the virtual environment. These controllers, such as the Oculus Touch®, are lightweight, wireless, handheld motion-detection devices with tactile controls that often feature a joystick or pad and buttons. They are typically sold as a pair, one for each hand, with each controller mirroring the other. They are fully tracked in 3-Dimensional space by a constellation of sensors. The “user sees them in virtual reality responding to their real world counterpart, giving the user the sensation of their hands being present in the virtual space” [1].

An enhanced user experience can also be created with haptic devices, which use various methods to send tactile feedback to the user and produce the perceived feeling of ‘touch’. Mimicking real-world perceptions, tracking movements and updating the rendered scene in real time are all essential requirements of ‘presence’.

Software

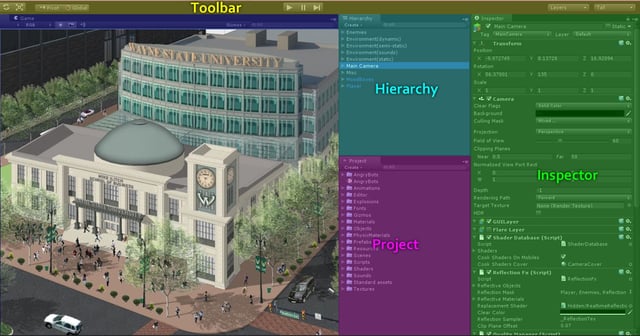

Mixed Reality (MR) content is typically developed with Game Engines that work across multiple Operating Systems and hardware platforms. Among the most popular are the Unity© and Unreal® game engines, which are widely used for mobile and desktop development because of their native MR integration. These environments are also known as middleware, and they “take care of low-level details of 3D rendering, physics, game behaviors and interfacing to devices” [3]. Many developers prefer to build their applications in an Integrated Development Environment (IDE) that comes with many easy-to-use features built in. Software Development Kits (SDKs) can be used to access core platform features, but typically only the most experienced programmers work directly with these tools.

Web browsers are another content delivery system that can be used to create and connect Mixed Reality applications. The Virtual Reality Markup Language (VRML) was a standard originally developed in the 1990s for representing interactive 3D vector graphics on the Internet, providing an online VR experience. VRML has since been superseded by X3D. The newer format is mostly backward-compatible with VRML and is routinely used to interchange 3D models. It has been accepted as a royalty-free standard by the International Organization for Standardization (ISO), and cooperation agreements are in place between the Web3D Consortium (developers of X3D) and the World Wide Web Consortium (W3C). Typically, an applet or a plug-in runs “within a web browser and displays content in 3D, using OpenGL 3D graphics technology to display X3D content in several different browsers (IE, Safari, Firefox) across several different operating systems (Windows, Mac OS X, Linux)” [7]. A goal of the developers is for the format to be integrated into HTML5 so that no additional components need to be installed for it to work.
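X3D scenes can be written in an XML encoding, so a minimal scene can be produced with Python's standard-library ElementTree. The sketch below builds a document containing a single red box. `X3D`, `Scene`, `Shape`, `Appearance`, `Material` and `Box` are standard X3D node names, while the profile, version and color chosen here are arbitrary, and a real file would normally also begin with an XML declaration.

```python
import xml.etree.ElementTree as ET


def minimal_x3d_scene(color=(1.0, 0.0, 0.0)):
    """Return an XML-encoded X3D document containing one colored box."""
    root = ET.Element("X3D", profile="Interchange", version="3.3")
    scene = ET.SubElement(root, "Scene")
    shape = ET.SubElement(scene, "Shape")
    appearance = ET.SubElement(shape, "Appearance")
    # X3D colors are RGB triples in the 0..1 range, written as space-separated numbers.
    ET.SubElement(appearance, "Material",
                  diffuseColor=" ".join(str(c) for c in color))
    ET.SubElement(shape, "Box", size="2 2 2")  # a 2 x 2 x 2 cube
    return ET.tostring(root, encoding="unicode")


print(minimal_x3d_scene())
```

Because the scene is plain, declarative markup rather than compiled code, the same file can be exchanged between tools and displayed by any X3D-capable viewer.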

Immersive 360-degree panoramic video is a class of Virtual Reality technology that’s becoming increasingly popular. Unlike Game Engines, where the images are completely synthetic (computer generated), this medium uses stereo video captured from the real world. Because the footage is pre-recorded, it cannot be as fully interactive as a 3D virtual environment. To create a complete panoramic environment, multiple cameras capture a 360-degree view of an entire scene simultaneously. This allows viewers to choose the angle they wish to watch from, providing the sensation of looking around. Facebook supports these video formats on its social media platform, and YouTube has a channel dedicated to them.
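Many 360-degree videos are stored in an equirectangular projection, where the horizontal axis of each frame spans 360 degrees of yaw and the vertical axis spans 180 degrees of pitch. Assuming that layout, a player can find which part of the frame the viewer is looking at with a simple linear mapping, sketched below in Python. The angle conventions and the frame size are assumptions, and a real player would sample many such directions to render a full perspective view.

```python
def direction_to_equirect_pixel(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to a pixel in an equirectangular 360-degree frame.

    Assumed conventions: yaw_deg in -180..180 with 0 at the frame's center and
    positive yaw to the right; pitch_deg in -90 (straight down)..90 (straight up).
    """
    u = (yaw_deg + 180.0) / 360.0   # 0 at the left edge, 1 at the right edge
    v = (90.0 - pitch_deg) / 180.0  # 0 at the top, 1 at the bottom
    # Clamp so that yaw = 180 or pitch = -90 still lands inside the frame.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return x, y


# Hypothetical 3840 x 1920 frame: where does the viewer's gaze land?
for yaw, pitch in ((0, 0), (90, 0), (0, 45)):
    print(f"yaw {yaw}, pitch {pitch} ->", direction_to_equirect_pixel(yaw, pitch, 3840, 1920))
```

As the viewer turns their head, the player simply reads from a different region of the same frame, which is why every angle must be captured at once.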

The key to a rich VR experience is complete synchronization between the computer software and hardware. Without it, the experience won’t be immersive and will lack ‘presence’.

The next chapter of the series will examine various ways organizations can leverage this technology.

References:

1. Sonakiya, Ankit. “Oculus Rift Release Date March 28 2016”. Release Date Portal, January 8, 2016. http://www.releasedateportal.com/gadgets/oculus-rift-release-date-march-28-2016/

2. Lalanne, Denis and Kohlas, Jurg (Eds.). “Human Machine Interaction”. Springer-Verlag Berlin Heidelberg, 2009.

3. Parisi, Tony. “Learning Virtual Reality”. O’Reilly, 2015.

4. dSky9, Inc. “Presence: Technical Requirements”. http://dsky9.com/rift/presence-technical-requirements/

5. dSky9, Inc. “Defining Presence: Beyond Immersion”. http://dsky9.com/rift/defining-presence-beyond-immersion/

6. McLellan, Charles. “AR and VR: The future of work and play?”. CBS Interactive, February 1, 2016. http://www.zdnet.com/article/ar-and-vr-the-future-of-work-and-play/

7. Wikimedia Foundation, Inc. “X3D”. https://en.wikipedia.org/wiki/X3D